Catalog Overview

The AI Models Catalog provides access to a wide range of pre-configured AI models from leading providers. Browse, compare, and use them on demand.

What's Available

Kloud Team's AI Models Catalog offers:

- Self-Hosted Models – Deploy open-source models on your own infrastructure

- Third-Party Models – Access models from Anthropic, OpenAI, xAI, and other providers

- Multiple Model Types – Chat completion, code generation, vision, video, and more

- Transparent Pricing – See token costs for prompts and completions upfront

Accessing the Catalog

Navigate to AI [Machine Learning] > Models > Catalog in the Kloud Team dashboard to browse available models.

Catalog Sections

The Models section includes:

- Catalog – Browse all available AI models

- My Library – View your deployed and saved models

- Playground – Test models before deployment

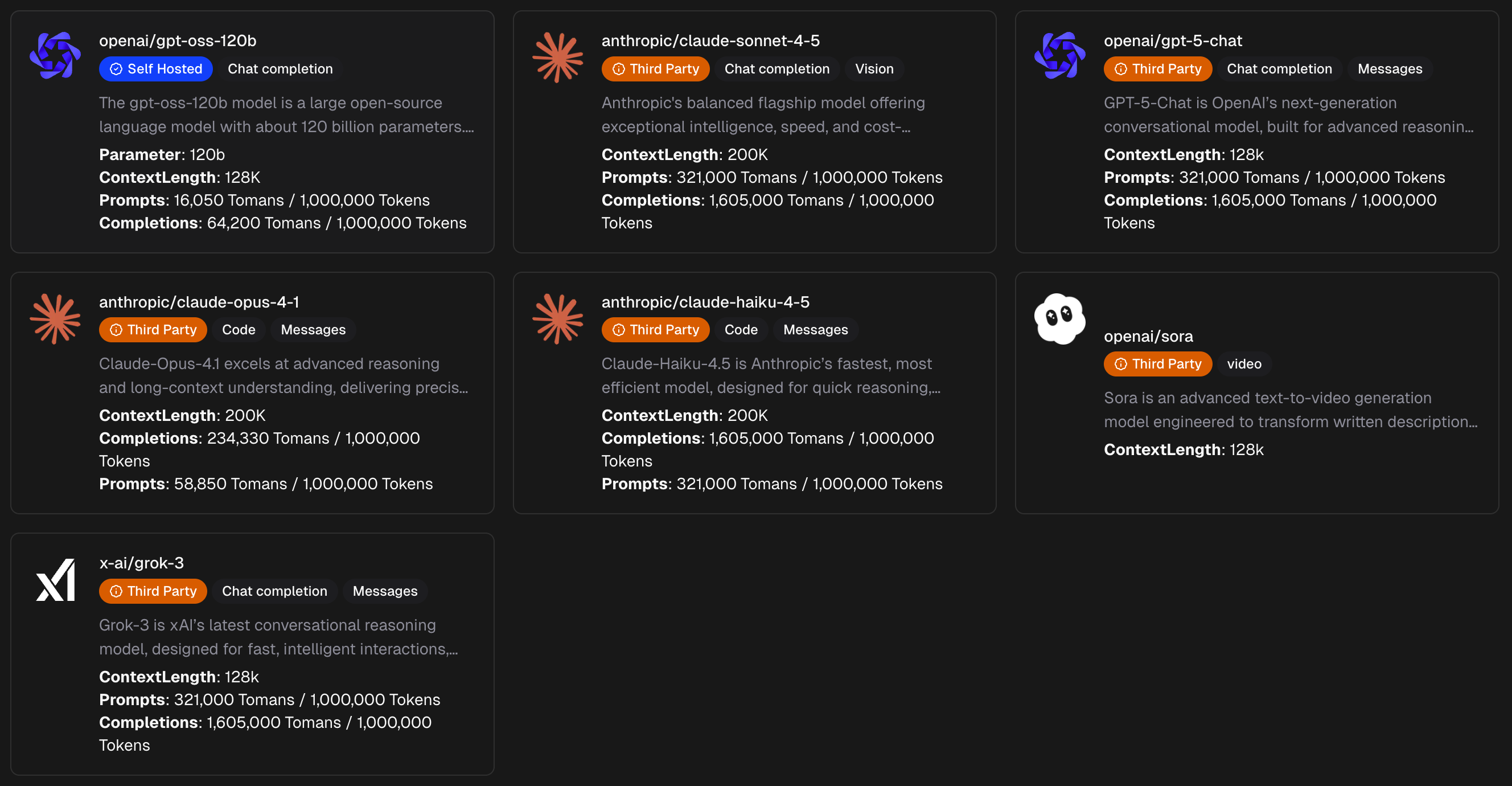

Available Models

Self-Hosted Models

Self-hosted models run on your own Kloud Team infrastructure, giving you full control over data and deployment:

openai/gpt-oss-120b

- Large open-source language model with 120B parameters

- Context Length: 128K tokens

- Prompts: 16,050 Tomans / 1,000,000 Tokens

- Completions: 64,200 Tomans / 1,000,000 Tokens

- Use cases: Chat completion, general AI tasks

Third-Party Models

Third-party models are hosted by the provider and accessed via API:

anthropic/claude-sonnet-4-5

- Anthropic's balanced flagship model

- Context Length: 200K tokens

- Prompts: 321,000 Tomans / 1,000,000 Tokens

- Completions: 1,605,000 Tomans / 1,000,000 Tokens

- Capabilities: Chat completion, vision

- Best for: Advanced reasoning with multimodal inputs

anthropic/claude-opus-4-1

- Advanced reasoning and long-context understanding

- Context Length: 200K tokens

- Prompts: 58,850 Tomans / 1,000,000 Tokens

- Completions: 234,330 Tomans / 1,000,000 Tokens

- Capabilities: Code generation, messages

- Best for: Complex coding tasks and analysis

anthropic/claude-haiku-4-5

- Anthropic's fastest and most efficient model

- Context Length: 200K tokens

- Prompts: 321,000 Tomans / 1,000,000 Tokens

- Completions: 1,605,000 Tomans / 1,000,000 Tokens

- Capabilities: Code generation, messages

- Best for: Quick reasoning and high-throughput tasks

openai/gpt-5-chat

- OpenAI's next-generation conversational model

- Context Length: 128K tokens

- Prompts: 321,000 Tomans / 1,000,000 Tokens

- Completions: 1,605,000 Tomans / 1,000,000 Tokens

- Capabilities: Chat completion, messages

- Best for: Advanced conversational AI

openai/sora

- Advanced text-to-video generation model

- Context Length: 128K tokens

- Capabilities: Video generation

- Best for: Creating videos from text descriptions

x-ai/grok-3

- xAI's latest conversational reasoning model

- Context Length: 128K tokens

- Prompts: 321,000 Tomans / 1,000,000 Tokens

- Completions: 1,605,000 Tomans / 1,000,000 Tokens

- Capabilities: Chat completion, messages

- Best for: Fast, intelligent interactions

Browsing Models

Search and Filter

Use the search bar to find models by name.

Models are organized by provider and capabilities (Chat completion, Code, Vision, Video, Messages).

Review Model Details

Each model card displays:

- Model Name – Provider and model identifier

- Hosting Type – Self Hosted or Third Party

- Capabilities – Supported features (chat, code, vision, etc.)

- Context Length – Maximum token context window

- Prompts Pricing – Cost per 1M input tokens

- Completions Pricing – Cost per 1M output tokens
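As a rough illustration, the fields on a model card can be captured in a small typed record. The `ModelCard` class and its field names below are hypothetical, chosen only to mirror the list above; they are not part of any Kloud Team API. The values come from the openai/gpt-oss-120b listing, with "128K" assumed to mean 128,000 tokens.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ModelCard:
    """Hypothetical record mirroring the fields shown on a catalog model card."""

    name: str                         # provider and model identifier, e.g. "openai/gpt-oss-120b"
    hosting_type: str                 # "Self Hosted" or "Third Party"
    capabilities: Tuple[str, ...]     # supported features
    context_length: int               # maximum context window, in tokens
    prompt_price: Optional[int]       # Tomans per 1M input tokens; None if not listed
    completion_price: Optional[int]   # Tomans per 1M output tokens; None if not listed

    @property
    def provider(self) -> str:
        # The provider is the part of the identifier before the first slash.
        return self.name.split("/", 1)[0]


# Values taken from the openai/gpt-oss-120b listing in this document.
gpt_oss = ModelCard(
    name="openai/gpt-oss-120b",
    hosting_type="Self Hosted",
    capabilities=("Chat completion",),
    context_length=128_000,  # assumption: "128K" read as 128,000
    prompt_price=16_050,
    completion_price=64_200,
)

print(gpt_oss.provider, gpt_oss.hosting_type)
```

The price fields are optional because a card may omit pricing, as the openai/sora listing does.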

Compare Models

Compare models based on:

- Context Length – Larger contexts for longer documents

- Pricing – Balance cost vs. performance

- Capabilities – Match features to your use case

- Hosting Type – Self-hosted for data control, third-party for convenience
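The comparison criteria above can be sketched as a simple filter over catalog data. This is only an illustration: the `MODELS` layout, the short capability labels, and the `pick_model` helper are assumptions made for this example, and ranking by the sum of prompt and completion price is one simplistic way to weigh cost. Prices and context lengths are copied from the listings in this document, with "K" assumed to mean thousands of tokens.

```python
# Prices are in Tomans per 1,000,000 tokens, as listed in this document.
# The dictionary keys and capability labels are illustrative, not a Kloud Team schema.
MODELS = [
    {"name": "openai/gpt-oss-120b", "hosting": "Self Hosted",
     "capabilities": {"chat"}, "context": 128_000,
     "prompt": 16_050, "completion": 64_200},
    {"name": "anthropic/claude-sonnet-4-5", "hosting": "Third Party",
     "capabilities": {"chat", "vision"}, "context": 200_000,
     "prompt": 321_000, "completion": 1_605_000},
    {"name": "anthropic/claude-opus-4-1", "hosting": "Third Party",
     "capabilities": {"code", "messages"}, "context": 200_000,
     "prompt": 58_850, "completion": 234_330},
    {"name": "x-ai/grok-3", "hosting": "Third Party",
     "capabilities": {"chat", "messages"}, "context": 128_000,
     "prompt": 321_000, "completion": 1_605_000},
]


def pick_model(models, capability, min_context=0, hosting=None):
    """Return the cheapest model that meets the requirements, or None if none does."""
    candidates = [
        m for m in models
        if capability in m["capabilities"]
        and m["context"] >= min_context
        and (hosting is None or m["hosting"] == hosting)
    ]
    # Rank by combined prompt + completion price (a deliberately simple heuristic).
    return min(candidates, key=lambda m: m["prompt"] + m["completion"], default=None)


print(pick_model(MODELS, "chat")["name"])
print(pick_model(MODELS, "chat", min_context=150_000)["name"])
```

With this data, the first call selects openai/gpt-oss-120b, and requiring at least 150,000 tokens of context narrows the chat candidates to anthropic/claude-sonnet-4-5.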

Model Types and Use Cases

Chat Completion Models

Best for conversational AI, customer support, and general-purpose text generation:

- claude-sonnet-4-5

- claude-haiku-4-5

- gpt-5-chat

- gpt-oss-120b

- grok-3

Code Generation Models

Optimized for programming tasks, code review, and technical documentation:

- claude-opus-4-1

- claude-haiku-4-5

Vision Models

Process and understand images alongside text:

- claude-sonnet-4-5 (multimodal)

Video Models

Generate videos from text descriptions:

- openai/sora

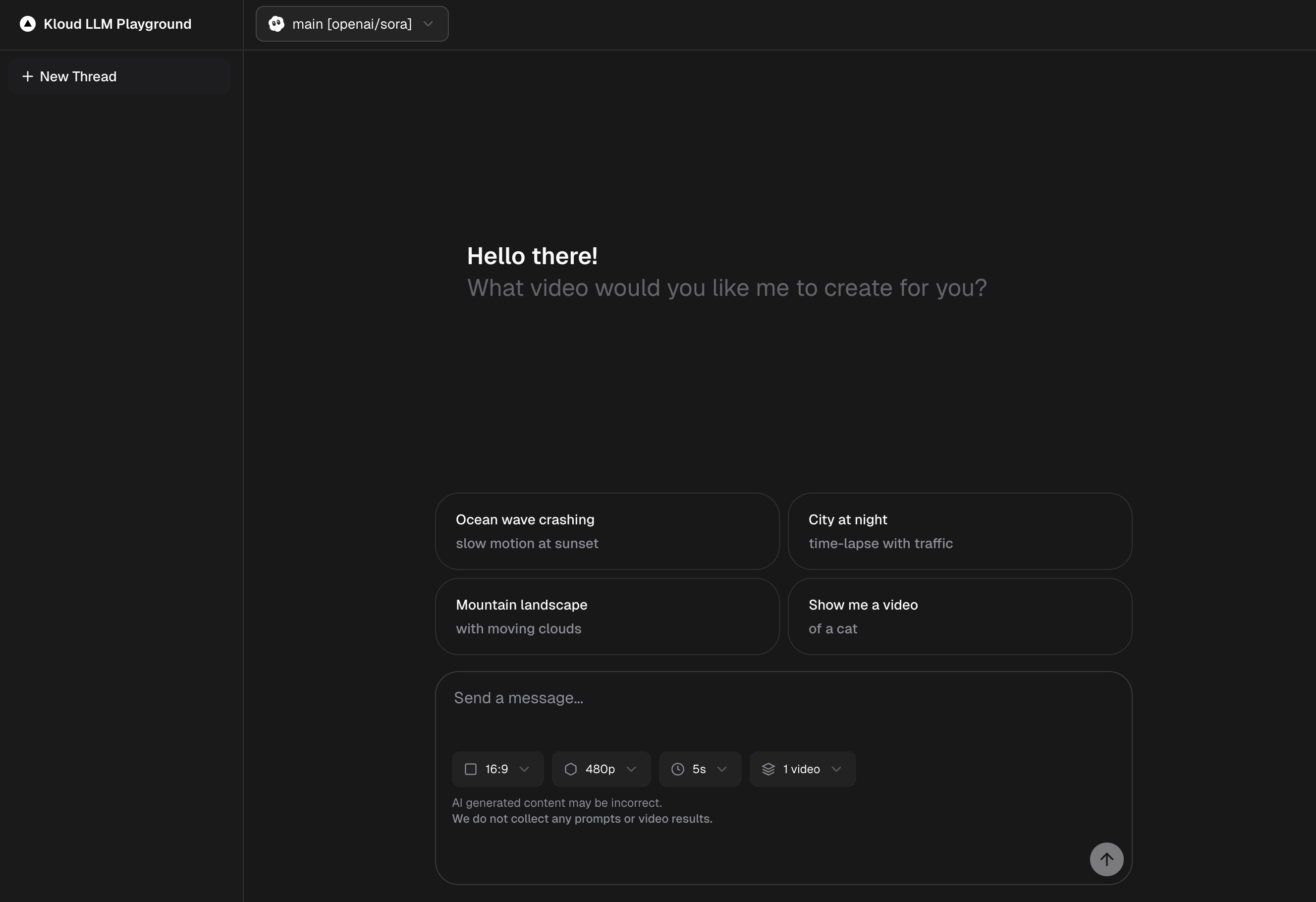

Playground

Use the Playground to test models before deployment.

Pricing Structure

Model pricing is based on token usage:

- Prompts (Input) – Tokens in your request

- Completions (Output) – Tokens in the model's response

- Pricing Unit – Tomans per 1,000,000 tokens
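As a worked example of this pricing model, the cost of a single request can be estimated from its token counts. The `request_cost` helper is a hypothetical name used for illustration; the prices are those listed for openai/gpt-oss-120b in this document, and the token counts are made up.

```python
TOKENS_PER_PRICING_UNIT = 1_000_000  # prices are quoted per 1,000,000 tokens


def request_cost(prompt_tokens, completion_tokens, prompt_price, completion_price):
    """Estimate the cost in Tomans of one request.

    prompt_price and completion_price are in Tomans per 1,000,000 tokens.
    """
    total = prompt_tokens * prompt_price + completion_tokens * completion_price
    return total / TOKENS_PER_PRICING_UNIT


# openai/gpt-oss-120b: 16,050 Tomans (prompts) and 64,200 Tomans (completions) per 1M tokens.
# Example request: 2,000 input tokens and 500 output tokens.
cost = request_cost(2_000, 500, prompt_price=16_050, completion_price=64_200)
print(cost)  # 64.2
```

Here the prompt and the completion each contribute 32.1 Tomans: output tokens cost four times as much per token for this model, so 500 output tokens cost the same as 2,000 input tokens.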

Cost Optimization:

To minimize costs:

- Use smaller context windows when possible

- Choose efficient models like Haiku for simple tasks

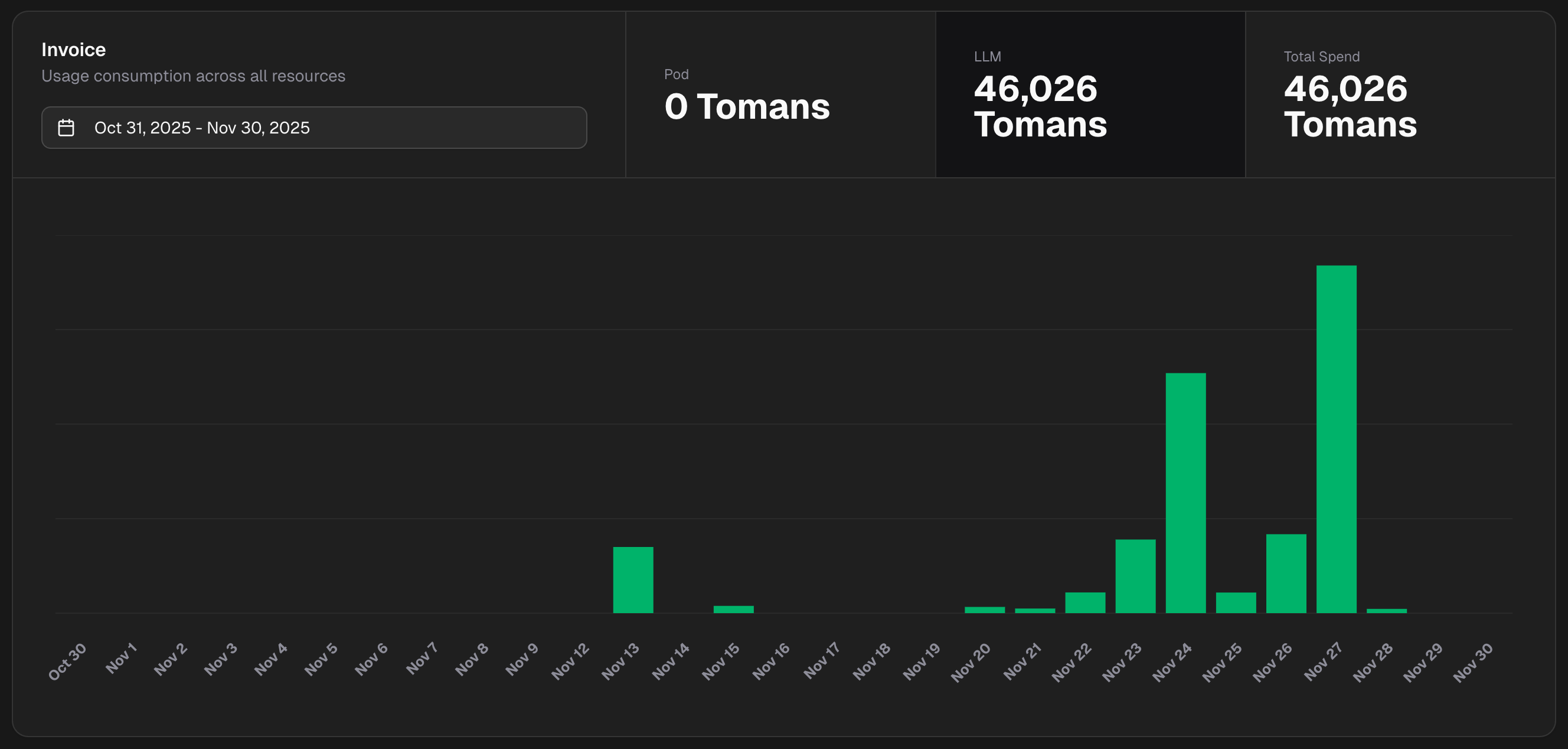

- Monitor llm token usage in the dashboard overview page

Monitoring LLM Token Usage